Introduction: The AI Explosion—Convenience vs. Security

Artificial intelligence (AI) has woven itself into every corner of our daily lives. From voice assistants and predictive text to personalised shopping recommendations, AI is the invisible hand guiding much of the modern digital experience. PwC estimates that AI could contribute up to $15.7 trillion to the global economy by 2030, yet the average consumer remains worryingly unaware of the privacy risks lurking behind this technological revolution.

At Warp Technologies, we see first-hand how enterprise AI is protected by cutting-edge security protocols, while everyday consumers are left exposed. This widening AI security gap raises urgent questions about digital rights, privacy, and the future of human-AI interaction.

The Corporate Fortress vs. The Consumer Wild West

In the corporate world, AI operates inside a fortress. Think:

- End-to-end encryption

- Federated learning models

- Zero-trust architectures

- Data governance frameworks

According to IBM’s Cost of a Data Breach Report (2024), organisations that made extensive use of AI-driven security saw average breach costs $1.76 million lower than those that did not. Businesses understand that without robust security, AI can quickly become a liability rather than an asset.

Contrast this with the consumer experience:

- Asking Alexa to remember your shopping list

- Sharing personal details with ChatGPT or ChatLLM

- Using AI-enhanced apps without reading the fine print

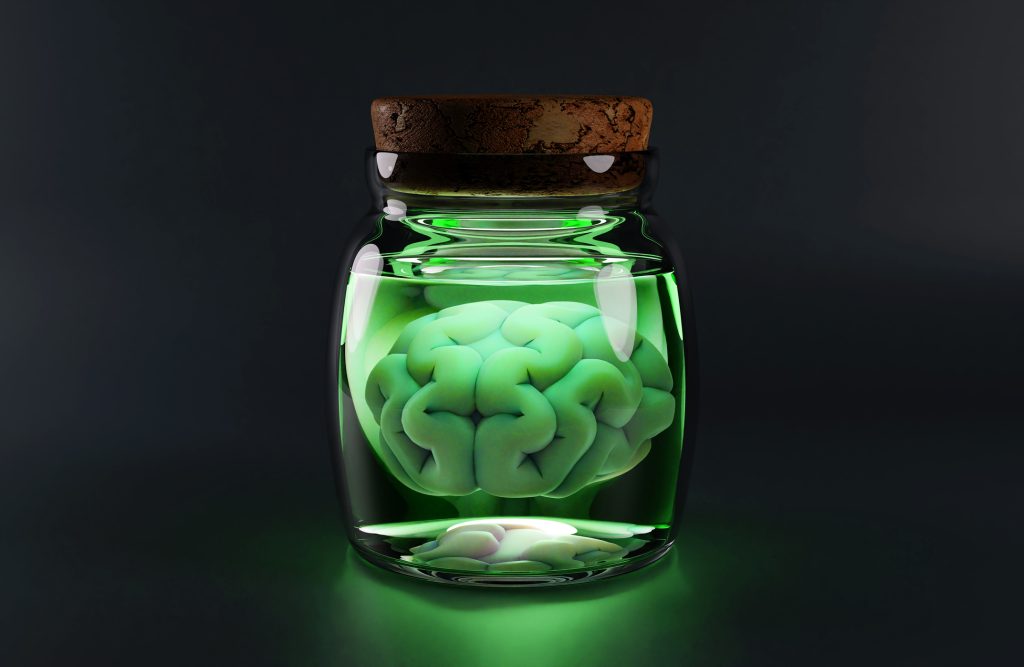

A Pew Research Center survey found that 79% of Americans are concerned about how companies use the data they collect about them, yet few take action to protect themselves. Consumers are, in essence, digital “brains in jars”: fully conscious, utterly reliant, and worryingly exposed.

The Privacy Paradox: Why We Keep Walking Into The Convenience Trap

AI thrives on intimacy. The more it knows—your behaviours, moods, preferences—the better it serves you. But this hyper-personalisation comes at a hidden cost:

- Your voice samples become training data.

- Your location history fuels behavioural predictions.

- Your online habits build a psychological profile.

According to McKinsey, 71% of consumers now expect companies to deliver personalised interactions. The pressure to meet this demand drives ever deeper data collection, often at the expense of privacy.

The Unseen Risks: From Friendly Chatbots to Surveillance Engines

The same AI capabilities that help businesses forecast markets can:

- Infer your mental health status

- Predict your political leanings

- Identify financial vulnerabilities

These are not theoretical risks. In a widely cited 2013 study, Michal Kosinski (now at Stanford University) and colleagues showed that models trained on Facebook “likes” could distinguish Democrats from Republicans with 85% accuracy.

The question is no longer “Is this happening?” but “Who has access to this data—and to what end?”

Bridging the Gap: How Businesses Lead the Way in AI Security

At Warp Technologies, we help businesses design AI solutions that don’t just innovate but also protect:

- Transparent algorithms

- Rigorous data governance

- Ethical AI design

Our AI Consulting Services help organisations strike the right balance between innovation and security—because transformation without protection is simply disruption in disguise.

A Call for Digital Rights: Why Consumer Protections Matter

If AI truly is the future, that future must be secure for everyone—not just those with enterprise budgets. Here’s what needs to change:

- Mandatory Transparency: Clear disclosures about data usage.

- Universal Privacy Protections: Consumer-grade AI should meet the same standards as enterprise AI.

- Regulation & Enforcement: Binding legislation such as the EU AI Act, and policy frameworks such as the UK’s AI White Paper, must become the norm and be actively enforced.

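Gartner warned that, through 2020, 80% of AI projects would remain “alchemy”, dependent on a handful of specialists whose skills could not scale across the organisation. Without proper governance, that risk has not gone away.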

Conclusion: The Future of AI Must Be Safe, Fair, and Transparent

AI is here to stay. It will power businesses, entertain us, assist us, and—yes—learn from us. But it must do so on terms that respect privacy and security for all.

At Warp Technologies, we believe in an AI-driven future that is both brilliant and safe.

What are your thoughts? Are we asking the right questions about AI privacy? Share your views and help shape the conversation.

Originally inspired by Andre Jay’s “Brain in a Jar”.

Further Reading:

- PwC: Global AI Study

- IBM: Cost of a Data Breach Report 2024

- Pew Research: Privacy Concerns

- McKinsey: Personalization Research

- Stanford: AI & Ideology Study

- Gartner: AI Governance Predictions

Andre Jay is Director of Technology at Warp Technologies. With over 20 years of experience in enterprise transformation, AI strategy, and cybersecurity, he leads efforts to bridge the gap between cutting-edge AI innovation and robust security practices.